The Wikimedia Foundation provides dumps of its projects. Among them is the full history of the pages: a compressed file containing the full text of every revision of every page. As you can guess, as new revisions are added, the space required grows very quickly, because each revision stores the whole page again. So I told myself that contributors should avoid making tons of very small modifications (each of them adds the full content of the page to the history).

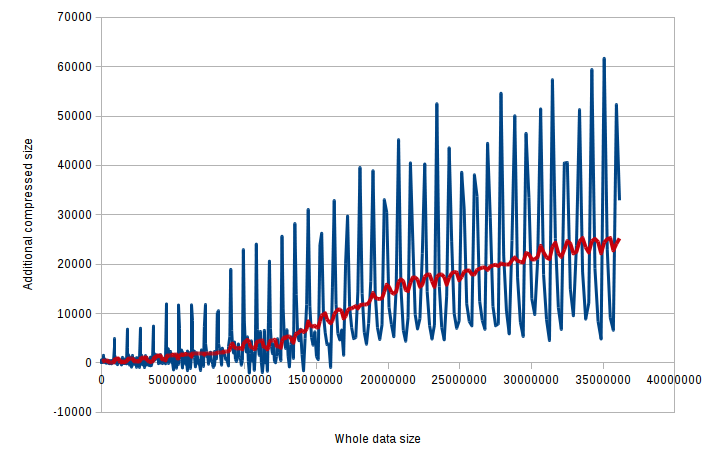

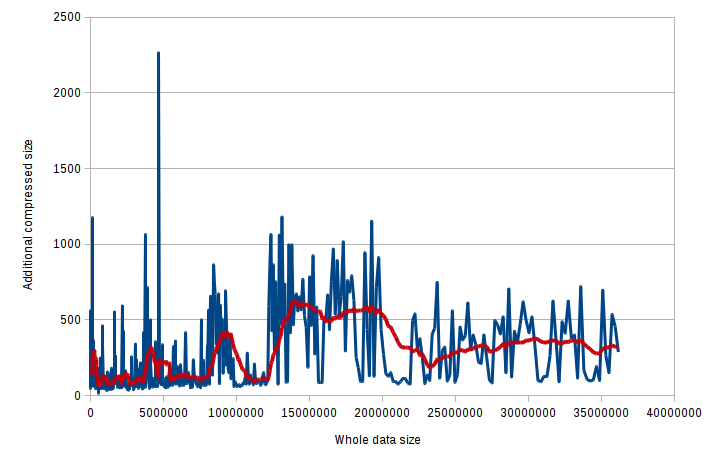

What about the compression of this history? It turns out that lzma (xz, 7zip) performs much better than bzip2. For example, for French Wikivoyage, the 7zip version is 5 times smaller than the bzip2 version. Here are some diagrams with:

- on the X axis: the size of the whole dump for a page after a given revision was added.

- on the Y axis: the size of the additional compressed data for this given revision.
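To make the two axes concrete, here is a minimal sketch of how such points could be computed. It is not the script used for the diagrams: the synthetic page history from `make_revisions` and the helper `incremental_points` are hypothetical names introduced for illustration, and recompressing the whole prefix for every revision is a simple but slow way to approximate "additional compressed data".

```python
import bz2
import lzma
import random


def make_revisions(count=30, seed=0):
    """Build a hypothetical page history: each revision is the previous
    text plus one new line of pseudo-random words (an assumption, not real data)."""
    rng = random.Random(seed)
    words = ["wiki", "page", "dump", "history", "revision", "text", "edit", "travel"]
    text = ""
    revisions = []
    for _ in range(count):
        text += " ".join(rng.choice(words) for _ in range(40)) + ".\n"
        revisions.append(text)
    return revisions


def incremental_points(revisions, compress):
    """Return one (x, y) pair per revision:
    x = raw size of the history so far, y = extra compressed bytes it cost."""
    points = []
    raw = b""
    previous_compressed = 0
    for revision in revisions:
        raw += revision.encode("utf-8")       # the dump stores the full text again
        compressed = len(compress(raw))       # recompress the whole prefix
        points.append((len(raw), compressed - previous_compressed))
        previous_compressed = compressed
    return points


if __name__ == "__main__":
    revisions = make_revisions()
    for name, compress in (("bzip2", bz2.compress), ("lzma", lzma.compress)):
        x, y = incremental_points(revisions, compress)[-1]
        print(f"{name}: last revision at x={x} bytes added y={y} compressed bytes")
```

Plotting the pairs returned by `incremental_points` for each compressor should give curves of the same kind as the diagrams, although the exact shape depends on the real page history.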

For bzip2:

As you can see, the compression rate is decreasing.

For lzma:

Compression rate against the full data size for bzip2:

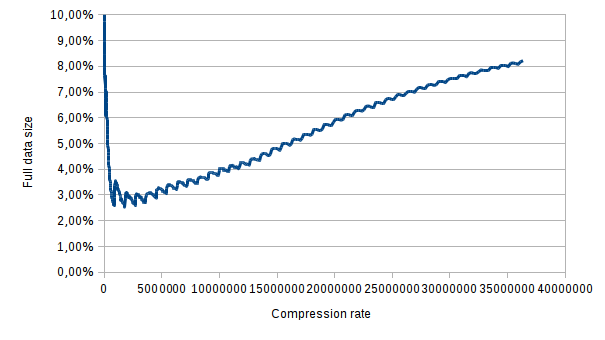

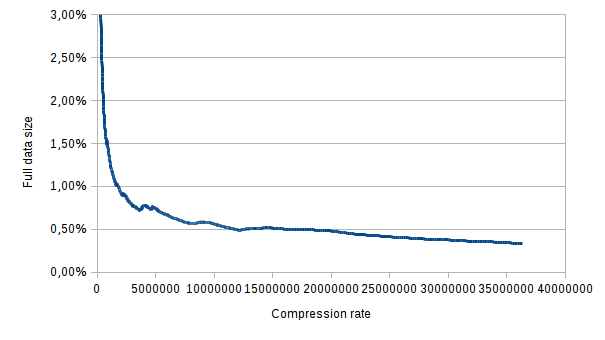

Compression rate against the full data size for lzma:

Conclusion: lzma handles large, very repetitive text much better than bzip2.
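The effect can be reproduced on synthetic data with Python's standard `bz2` and `lzma` modules. The sketch below is only an illustration under stated assumptions: `build_history` and `compare` are hypothetical helpers, and the "page" is made of random hex words rather than real wiki text. One plausible explanation for the gap, which is my assumption and not something measured here, is that bzip2 compresses independent blocks of at most 900 kB, while lzma keeps a dictionary of several MiB and can refer back to an earlier copy of the page.

```python
import bz2
import lzma
import random


def build_history(base_words=30_000, revisions=12, seed=0):
    """Concatenate every revision of a hypothetical page, full text each time."""
    rng = random.Random(seed)

    def words(n):
        # Random 6-digit hex "words": hard to compress within a single revision.
        return " ".join(f"{rng.getrandbits(24):06x}" for _ in range(n))

    page = words(base_words)
    history = []
    for _ in range(revisions):
        page += "\n" + words(20)  # a small edit appended to the page
        history.append(page)      # the dump stores the whole page again
    return "\n".join(history).encode("ascii")


def compare(data):
    """Return the raw size and the bzip2 / lzma compressed sizes in bytes."""
    return {
        "raw": len(data),
        "bz2": len(bz2.compress(data)),    # default level 9, 900 kB blocks
        "lzma": len(lzma.compress(data)),  # xz container, default preset
    }


if __name__ == "__main__":
    for name, size in compare(build_history()).items():
        print(f"{name:>4}: {size:>10,d} bytes")
```

The numbers depend entirely on the synthetic input, so they say nothing about the real dumps beyond showing the same direction of the difference.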

I expect the bzip2 dumps to grow larger and larger. The French Wikipedia dump is currently 110 GB in bz2 and only 15 GB in 7z, about 7 times smaller.