In my previous attempt of parsing Openstreetmap data, I found parsing XML data slow. Fortunately I realized that the data was also available in Google Prococol Buffer format, and

- it’s 30-40% smaller than bzip2 XML (and you know, bzip2 requires a fair amount of CPU power)

- it’s much faster to parse: with Osmosis, reading a PBF file on my quad-core took 14 seconds, and reading the XML.bzip2 with the help of lbunzip2 (multi-thread decompressor) it took 1mn50s. Ouch!

There is a Go library to handle Protocol Buffers, so I tried to write a PBF reader in this language and could see how efficient it would be. My program worked like this:

- the main thread would read blocks from the file and pass them to thread workers using a channel

- each worker (a goroutine) would decompress the block, unmarshall the data and process it

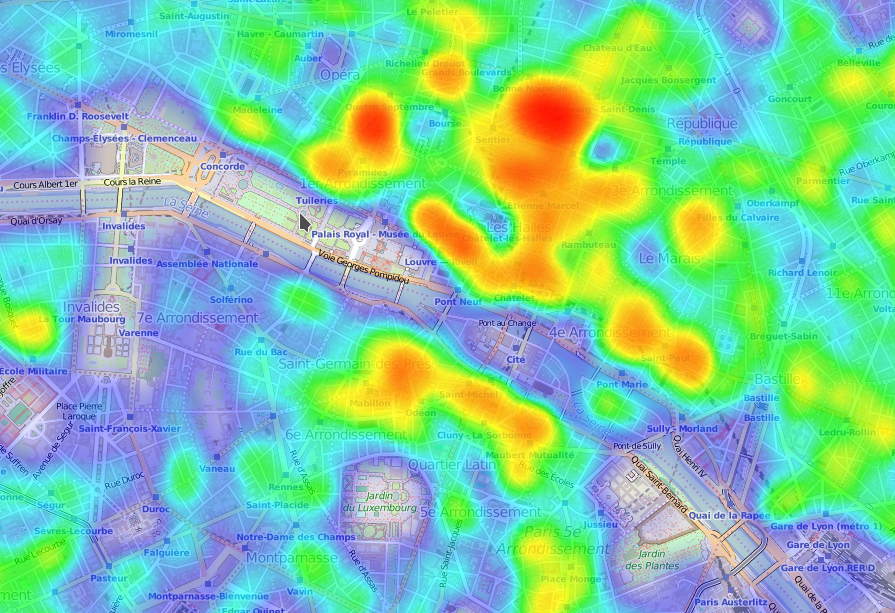

- when there would not be any block left to process, the results of the workers would be merged into a single image

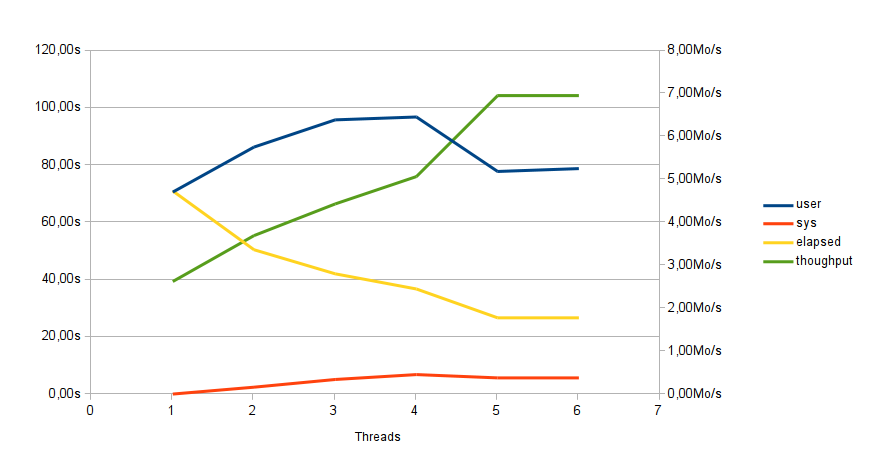

So what kind of performance did I get? I get the best result with a number of workers equal to the number of cores + 1 (so 5 workers): about 28 seconds. I cannot compare this result with Osmosis (not all the cases are handled), but it’s quite acceptable.

I find Go a nice language to use, and it compiles very very quickly. I struggled a little bit with some points, and everything is not clear yet for me also. It feels strange not to program in a OO-way. And I can’t be sure if I have to trigger tons of goroutines or use a pool of workers, if I should pass callback functions or channels.

That’s also a pity that the tremendous performance is not there yet. It’s supposed to be «close to the metal», «a language for system programming», but for the moment (after 3 years) it is not a fast as Java.